In this second post I'll show a simple way to manage client code branches. (Well, simple compared with CVS and SVN).

In this example, I imagine that each client has their own custom changes to the core code base, and also packages that live in a client directory in their own part of the code tree.

I'll be assuming that you already have a repository to work with. In this case I have committed all the public releases of MySource Matrix (the CMS I use at work) to Git, creating a new branch for each public release.

We start at the prompt:

qed:~/mysource_matrix [master] $

The current working copy is at the head of the master branch, which in this case contains release 3.18.2. The current branch name is shown in my prompt.

First, I'm going to change to the 3.16 branch of the repo and create a client branch. In Git this is done with the checkout command.

qed:~/mysource_matrix [master] $ git checkout v3.16

Checking out files: 100% (2426/2426), done.

Switched to branch "v3.16"

qed:~/mysource_matrix [v3.16] $

We are now at the head of the 3.16 branch. The last version on this branch (in my case) is 3.16.8.

I'll create a client branch.

qed:~/mysource_matrix [v3.16] $ git checkout -b client

Switched to a new branch "client"

qed:~/mysource_matrix [client] $

In the real world this would be a development repository, so there would be a lot of other commits and you'd likely base your branch off a release tag, rather than the head.
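Basing the client branch on a release tag rather than the branch head might look something like the following. This is only a sketch in a throwaway repository: the tag name v3.16.8, the file contents and the committer identity are all assumptions made for illustration, not taken from the real Matrix repository.

```shell
#!/bin/sh
set -e

# Throwaway repository standing in for a development repo (illustration only).
repo=$(mktemp -d)
cd "$repo"
git init -q
git symbolic-ref HEAD refs/heads/v3.16      # name the first branch v3.16
git config user.name "Example"
git config user.email "example@example.com"

# A release commit, tagged with a hypothetical release tag.
echo "VERSION 3.16.8" > CHANGELOG
git add CHANGELOG
git commit -q -m "v3.16.8"
git tag v3.16.8

# Development carries on past the release.
echo "work in progress" >> CHANGELOG
git commit -q -a -m "post-release development"

# Start the client branch at the tagged release, not at the branch head.
git checkout -q -b client v3.16.8
git log --oneline -1
```

The second argument to git checkout -b is the starting point, so the client branch begins at the release and does not pick up the unreleased work.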

To keep things simple I am going to make two changes: one to an existing file, and one new file.

The first alteration is to the CHANGELOG. I'll add some text after the title.

After making the change, we can see the status of Git:

qed:~/mysource_matrix [client] $ git status

# On branch client

# Changed but not updated:

# (use "git add <file>..." to update what will be committed)

#

# modified: CHANGELOG

#

no changes added to commit (use "git add" and/or "git commit -a")

You can also see the changes with diff.

qed:~/mysource_matrix [client] $ git diff

diff --git a/CHANGELOG b/CHANGELOG

index a1240cb..54f5f50 100755

--- a/CHANGELOG

+++ b/CHANGELOG

@@ -1,4 +1,4 @@

-MYSOURCE MATRIX CHANGELOG

+MYSOURCE MATRIX CHANGELOG (Client Version)

VERSION 3.16.8

So I'll commit this change:

qed:~/mysource_matrix [client] $ git commit -a -m"changed title code in core"

Created commit 54a3c24: changed title code in core

1 files changed, 2 insertions(+), 2 deletions(-)

(I'm ignoring the other change to the file - an extended character replacement that my editor seems to have done for me.)

Now I'll add a file outside the core - one in our own package. Looking at git status:

qed:~/mysource_matrix [client] $ git status

# On branch client

# Untracked files:

# (use "git add <file>..." to include in what will be committed)

#

# packages/client/

nothing added to commit but untracked files present (use "git add" to track)

We need to add this file to Git as it is not currently tracked:

qed:~/Downloads/mysource_matrix [client] $ git add packages/client/client_code.inc

And then commit it.

qed:~/mysource_matrix [client] $ git commit -m"added client package"

Created commit 82ac0ed: added client package

1 files changed, 5 insertions(+), 0 deletions(-)

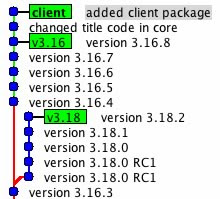

create mode 100644 packages/client/client_code.incLooking at gitk you can see that the client branch is now two commits ahead of the 3.16 branch.

We'll assume that this client code is now in production, and a new version of Matrix is released.

In order to move the 3.16 branch forward for this example, I'll add the latest release. First I'll check out the core branch that we based our client branch on:

qed:~/mysource_matrix [client] $ git checkout v3.16

Switched to branch "v3.16"

If you check in the working directory you'll see that the client changes have gone. The working directory now reflects the state of the head of the branch we just switched to.

I'll extract the new code release over the top of the current working copy.

qed:~/$ tar xvf mysource_3-16-9.tar.gz

If we look at git status we can see some files changed and some files added.

qed:~/mysource_matrix [v3.16] $ git status

# On branch v3.16

# Changed but not updated:

# (use "git add <file>..." to update what will be committed)

#

# modified: CHANGELOG

# modified: core/assets/bodycopy/bodycopy_container/bodycopy_container.inc

# modified: core/assets/designs/design_area/design_area_edit_fns.inc

# SNIP: Full file list removed for brevity

# modified: scripts/add_remove_url.php

#

# Untracked files:

# (use "git add <file>..." to include in what will be committed)

#

# packages/cms/hipo_jobs/

# packages/cms/tools/

no changes added to commit (use "git add" and/or "git commit -a")

Then add all changes and new files to the index ready to commit:

qed:~/mysource_matrix [v3.16] $ git add .

I'll then commit them:

qed:~/Downloads/mysource_matrix [v3.16] $ git commit -m"v3.16.9"

Created commit ea7a183: v3.16.9

33 files changed, 1412 insertions(+), 110 deletions(-)

create mode 100755 packages/cms/hipo_jobs/hipo_job_tool_export_files.inc

create mode 100755 packages/cms/tools/tool_export_files/asset.xml

create mode 100755 packages/cms/tools/tool_export_files/tool_export_files.inc

create mode 100755 packages/cms/tools/tool_export_files/tool_export_files_edit_fns.inc

create mode 100755 packages/cms/tools/tool_export_files/tool_export_files_management.inc

Let's look at gitk again:

You can see that the 3.16 branch and the client branch have split - they both have changes that the other branch doesn't. (In practice this would be a series of commits and a release tag, although people using the GPL version could manage their local codebase exactly as I describe it here).

There are two ways to go at this point: rebase and merge.

Rebase

This can be used in a single local repository. Rebase allows us to reapply our branch changes on top of the 3.16 branch, effectively retaining our custom code. (The package code is not a problem as it only exists in our client branch.)

Rebase checks the code you are coming from and the code you are going to. If there is a conflict with any code you have changed on the branch, then you'll be warned that there is a clash to resolve.

Let's switch back to the client branch (git checkout client) and do the rebase:

qed:~/mysource_matrix [client] $ git rebase v3.16

First, rewinding head to replay your work on top of it...

HEAD is now at b67fef6 version 3.16.9

Applying changed title code in core

error: patch failed: CHANGELOG:1

error: CHANGELOG: patch does not apply

Using index info to reconstruct a base tree...

Falling back to patching base and 3-way merge...

Auto-merged CHANGELOG

Applying added client package

I'll walk through each step of the process:

qed:~/mysource_matrix [client] $ git rebase v3.16

This is telling git to rebase the current branch on top of the head of the v3.16 branch.

First, rewinding head to replay your work on top of it...

HEAD is now at b67fef6 version 3.16.9

Git is changing the working copy (this is a checkout, and it shows the commit message).

Applying changed title code in core

error: patch failed: CHANGELOG:1

error: CHANGELOG: patch does not apply

Using index info to reconstruct a base tree...

Falling back to patching base and 3-way merge...

Auto-merged CHANGELOG

The next block is git re-applying the first commit you made on your client branch on top of the checkout.

In this case git found the line we changed in the core and was able to auto-merge the change.

Applying added client package

This last line was the second commit. As this contained new files not in the core, the patch was trivial.

This is what the rebase looks like:

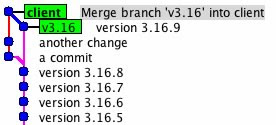

You can see that the branch appears to have been removed from the original branch point (release 3.16.8) and reconnected later (release 3.16.9).
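The same sequence can be tried safely in a scratch repository. What follows is a rough sketch rather than the real Matrix history: the file contents, commit messages and committer identity are assumptions, and the upstream change is deliberately placed away from the client's edit so that it applies cleanly.

```shell
#!/bin/sh
set -e

# Scratch repository (illustration only; contents are made up).
repo=$(mktemp -d)
cd "$repo"
git init -q
git symbolic-ref HEAD refs/heads/v3.16
git config user.name "Example"
git config user.email "example@example.com"

# Core release on the v3.16 branch.
printf 'MYSOURCE MATRIX CHANGELOG\n\nVERSION 3.16.8\n\n- fix one\n- fix two\n' > CHANGELOG
git add CHANGELOG
git commit -q -m "v3.16.8"

# Client branch: one change to a core file, one new package file.
git checkout -q -b client
printf 'MYSOURCE MATRIX CHANGELOG (Client Version)\n\nVERSION 3.16.8\n\n- fix one\n- fix two\n' > CHANGELOG
git commit -q -a -m "changed title code in core"
mkdir -p packages/client
echo "client code" > packages/client/client_code.inc
git add packages/client/client_code.inc
git commit -q -m "added client package"

# Upstream moves on: the next release lands on v3.16.
git checkout -q v3.16
printf '\nVERSION 3.16.9\n\n- fix three\n' >> CHANGELOG
git commit -q -a -m "v3.16.9"

# Replay the two client commits on top of the new release.
git checkout -q client
git rebase -q v3.16

git log --oneline --graph --all
```

After the rebase the client branch sits directly on top of the v3.16 head, still two commits ahead, which matches the picture gitk shows.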

Merge

Merge is the best option when you want to make the client branches and their histories available to other people. This would happen when there are multiple developers working in the same code.

The following merges the current state of the 3.16 branch (release 3.16.9) into the client branch.

qed:~/mysource_matrix [client] $ git merge v3.16

Auto-merged CHANGELOG

Merge made by recursive.

CHANGELOG | 126 +++++

.../bodycopy_container/bodycopy_container.inc | 11 +-

.../designs/design_area/design_area_edit_fns.inc | 6 +-

SNIP: a bunch of file changes and additions

create mode 100755 scripts/regen_metadata_schemas.php

All new files are pulled in, and any other changes are applied to existing files. Custom changes are retained, and conflicts are marked. This is what the merge looks like:

At this point you can retain the client branch and merge from 3.16.10 when it arrives (or indeed from 3.18 if you want).

If things go wrong (there is a conflict) you'll get the chance to resolve the conflict. Git will give a range of options.

A conflict will occur when the current branch and the branch being merged in have different changes on the same line (or lines). This can be manually resolved, and this ensures that your custom changes are retained (or updated).
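To see what a conflict and its manual resolution look like, here is a small sketch in a scratch repository. Both branches change the same line on purpose; the wording of each change, the commit messages and the committer identity are assumptions for illustration.

```shell
#!/bin/sh
set -e

# Scratch repository (illustration only).
repo=$(mktemp -d)
cd "$repo"
git init -q
git symbolic-ref HEAD refs/heads/v3.16
git config user.name "Example"
git config user.email "example@example.com"

echo "MYSOURCE MATRIX CHANGELOG" > CHANGELOG
git add CHANGELOG
git commit -q -m "v3.16.8"

# The client branch edits the title line.
git checkout -q -b client
echo "MYSOURCE MATRIX CHANGELOG (Client Version)" > CHANGELOG
git commit -q -a -m "client title"

# Upstream edits the same line differently.
git checkout -q v3.16
echo "MYSOURCE MATRIX CHANGE LOG" > CHANGELOG
git commit -q -a -m "v3.16.9"

# The merge stops because both sides changed line 1.
git checkout -q client
git merge v3.16 || echo "merge stopped: conflicts to resolve"

# List the files Git could not merge automatically.
conflicted=$(git diff --name-only --diff-filter=U)
echo "conflicted: $conflicted"

# Resolve by hand (here: upstream wording plus the client suffix),
# then mark the file as resolved and conclude the merge.
echo "MYSOURCE MATRIX CHANGE LOG (Client Version)" > CHANGELOG
git add CHANGELOG
git commit -q -m "merge v3.16 into client"
```

In real use you would edit the conflict markers in the file rather than overwrite it, but the add-then-commit step that concludes the merge is the same.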

Just released in git 1.5.6 is the ability to list branches that have been merged (and not merged) to the current branch. This would be useful to see what code has ended up in a client branch.
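The options in question are git branch --merged and git branch --no-merged. A quick sketch of how they might be used to audit a client branch follows; the branch names feature-a and feature-b are hypothetical, as are the files and committer identity.

```shell
#!/bin/sh
set -e

# Scratch repository (illustration only).
repo=$(mktemp -d)
cd "$repo"
git init -q
git symbolic-ref HEAD refs/heads/client
git config user.name "Example"
git config user.email "example@example.com"

echo "base" > file.txt
git add file.txt
git commit -q -m "base"

# Two hypothetical feature branches, each with one commit.
git checkout -q -b feature-a
echo "a" > a.txt
git add a.txt
git commit -q -m "feature a"

git checkout -q client
git checkout -q -b feature-b
echo "b" > b.txt
git add b.txt
git commit -q -m "feature b"

# Only feature-a is merged into the client branch.
git checkout -q client
git merge -q feature-a

echo "merged into client:"
git branch --merged
echo "not yet merged:"
git branch --no-merged
```

The first list includes the current branch itself (marked with an asterisk) as well as feature-a; feature-b appears only in the second list.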

I'd suggest you view the gitcasts for rebase and merging, as these show how to resolve conflicts and some other advanced topics.

A few other points

1. You should not publish branches that have been rebased. The manpage is clear: "When you rebase a branch, you are changing its history in a way that will cause problems for anyone who already has a copy of the branch in their repository and tries to pull updates from you. You should understand the implications of using git rebase on a repository that you share." This might be a problem if many developers are sharing code from a 'master' git repository, and more than one needs access to these branches. Better to use merge in these cases.

2. The repository I have shown here is somewhat subversion-esque in that there is a trunk and release branches. It would be just as simple to have the master branch being stable and containing tagged releases, with all the development and bug fixes being done on other branches. (Bug fixes and development work are merged back into the stable release [master] branch.) This is how many git repositories are set up, and this is also the way I tend to use it for new projects.

3. Because branching (and merging) is so simple in Git, I use it all the time. You can do all sorts of useful things. For example, you could branch off a client branch, add some special feature, and then merge this back into any other branch - another client, release, or development. You could base a feature off a particular tag, merge it into two client branches, update it for PHP5, update the client branch packages for PHP5, merge in the feature and then merge in the upstream PHP5 changes.

SVN users have probably passed-out at this point.

Have fun.

