About 6 years ago I had a conversion experience. This completely changed my world view and opened up new ways of thinking. I am talking about web standards, and my discovery of the work of Jeffrey Zeldman and others at the Web Standards Project.

Like many others at the time I became a zealot for the cause, and this inevitably led me down the path to other standards and the Web Accessibility Initiative.

This all seemed like good sense at the time, and like most good followers of the cause I adhered to the published best practices espoused by the prophets.

Like most people, this is how I ran my web projects:

1. Specify the accessibility standards that have to be met (internally or by a contractor).

2. Schedule a testing phase at some point prior to launch, and perhaps commission a report.

3. Fix the things that didn't meet the standard.

4. Launch the site.

5. Job done.

Sadly, once any site is launched the second law of thermodynamics begins to do its work. This law states that all closed systems (those that don't have more energy applied to them from outside) tend to return to a state of chaos. Does that sound like most websites?

Without constant vigilance content becomes less relevant (or wrong), and markup gets less valid over time. From an accessibility point of view, attention to the details that matter (e.g. access keys, hidden headings, and general markup) will also tend to disperse, making for an inconsistent experience.

After the fourth iteration of the RNZ site was launched (the one you see today) I realised that we'd been doing things differently. No longer did we simply follow the 'recipes' advocated by others; those practices had become a way of life and we were developing our own ways of doing things. New content areas were being created, and we were thinking about accessibility issues without thinking about them. I thought we had accessibility sussed.

But earlier this year I got some feedback that completely changed my view. Someone had attended a session on screen readers run by the Royal NZ Foundation of the Blind, and had blogged about one small problem with our site. I was mortified. A problem with OUR site?

The problem was that links in the Features section of the home page did not make sense to screen readers. The links following each feature had the text "Find out more", and a screen reader navigating the page would hear a series of links read thus:

link: Find out more

link: Find out more

link: Find out more

Not very helpful, so we added hidden text to each link:

Find out more about Podcast Classics

Find out more about Enzology

The extra text sits in a span with a CSS class that removes it from the visual layout.
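As a rough sketch of the idea (not the actual RNZ markup), the link can be assembled so that the visible part stays "Find out more" while the feature name rides along in a hidden span. The class name `hide`, the helper names and the URL are assumptions made for illustration, and the CSS rule that takes the span out of the visual layout is assumed to exist in the stylesheet.

```javascript
// Escape text so it is safe to place inside HTML.
function escapeHtml(text) {
  return String(text)
    .replace(/&/g, '&amp;')
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;')
    .replace(/"/g, '&quot;');
}

// Build a "Find out more" link whose full text names the feature.
// "hide" is a hypothetical class; its CSS is assumed to move the span
// out of the visual layout while leaving it readable by screen readers.
function buildFeatureLink(href, featureName) {
  return '<a href="' + escapeHtml(href) + '">Find out more' +
    '<span class="hide"> about ' + escapeHtml(featureName) + '</span></a>';
}

console.log(buildFeatureLink('/features/enzology', 'Enzology'));
```

A screen reader listing the links on the page would then announce each one with its feature name, while sighted users still see the short version.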

We've made the use of hidden text standard practice on the site. There are already many hidden headings to say what each section of the page is for: Main navigation list, current location in site, Menu, secondary navigation, and so on, so this was a simple change.

This was a wake up call for me, and highlights a reality: your accessibility effort is never complete.

For our next project Radio NZ is developing a Javascript framework to use as the basis of a new audio player. Our aim is to make something that is simple to use and is highly accessible for those with screen readers. This will be free software and is being developed as an open source project so others can use what we build and contribute.

A few weeks ago I subscribed to the Assistive Technology Interest Group run by the Royal NZ Foundation of the Blind. I did this to enlist the help of the community who'd have to use the player, and for whom it is probably most important to get it right.

One of the benefits of going direct (instead of commissioning a report) is that a conversation can develop. The player can (and will) be fine-tuned through many iterations based on the detailed feedback that comes from talking with people. Compared with the usual build it, test it, fix it approach, I think this process will create a better outcome for everyone.

I have been humbled by the helpfulness of people on the list, and their willingness to explain things that are obvious to them, but not to me. I have also benefited from reading conversations about other access issues.

While on the list I've found that the community is changing all the time - for example new technologies like ARIA are being developed and implemented. People are finding better ways to do things. My expectations of what can now be achieved have gone up.

Where to from here?

My experience has raised some interesting questions. Why is it that most web projects do not talk directly to their end users? Or, if they do, why is it via a third party?

If you were born in or before the 60s, think back to when you were a child and see if this rings true. Recall a time when you saw a person with a disability in the street. You had never seen anyone like this before and, being curious, you took a good look. Your parent saw you looking and told you not to stare. For my generation disability was a thing you hid away and did not discuss.

I shared these thoughts at a recent talk I gave, and there were a lot of heads nodding. From the response I got, I suspect that many people don't engage with the disabled because they are embarrassed and have no idea where to start.

I was talking this over with my wife (a teacher) and she pointed out that while that may be true for our generation (born early 60s), our children (born in the 90s) do not have this problem. The disabled are not hidden away, but attend the same schools as everyone else. They have the same opportunities. There is a different view of 'normal'.

This might be the reason why my generation is less willing to engage with the disabled, and will hire a consultant instead.

That is not to say that you should not hire a consultant; a good one will guide you through the process of making your site accessible, and help you put in place systems to ensure it stays that way. A great consultant will help you to communicate with your end-users on an on-going basis.

To summarise: accessibility is not a box to tick. It is not an event. It is not even a process. It is a conversation, a conversation that has to involve those whom your site exists to serve.

If you have never spent time with people who use assistive technologies to access your site, now is the time to start.

Tuesday, December 16, 2008

Tuesday, December 9, 2008

Follow the mouse cursor with jQuery

At the moment This Way Up on Radio New Zealand is running a feature on keeping bees in your backyard. One of the ideas our web producer Dempsey Woodley came up with for the Bee pages was a bee chasing the cursor around the screen.

The pair of us hunted for code to do this, but most of it seems to have been designed when Netscape 4 was still a dominant force in the browser market. The web also seems to have moved on from such effects.

The first couple Dempsey tried locked the bee image to the cursor, and did not really give a sense of being chased. Then he found the Mouse Squidie effect from Javascript-FX. Perfect.

Two problems though - it did not work in IE 6+, and was not configurable without hacking into the code.

So I have rewritten the thing from scratch based on the original algorithm, and using jQuery to replace the old library functions. I also added a bunch of configuration options so that the effect can be fine tuned.

You can see the new version of the script on the Bee chased page. Like most effects of this type, a little goes a long way.

It's up on github.
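The difference between an image locked to the cursor and one that chases it comes down to how far the image moves on each tick. The following is a minimal sketch of one common way to get a chasing feel (simple easing). It is not the Mouse Squidie algorithm or the code in the repository, and the names `stepToward` and `easing` are made up for illustration.

```javascript
// Move a follower part of the way toward a target on each tick.
// easing is a fraction between 0 and 1: 1 locks the follower to the
// target, smaller values make it lag behind and appear to chase.
function stepToward(follower, target, easing) {
  return {
    x: follower.x + (target.x - follower.x) * easing,
    y: follower.y + (target.y - follower.y) * easing
  };
}

// Simulate a few ticks with the cursor held at (100, 50).
var bee = { x: 0, y: 0 };
var cursor = { x: 100, y: 50 };
for (var tick = 0; tick < 3; tick++) {
  bee = stepToward(bee, cursor, 0.5);
  console.log(tick, bee.x, bee.y);
}
```

In a page, the cursor position would come from a mousemove handler and the step would run on a timer, with the result applied to the image's position.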


Tuesday, November 18, 2008

A Free and Open Source Audio Player

I am proud to announce Radio New Zealand's first free software project.

The project is a set of modular tools that we'll be using to build new audio functionality for the Radio NZ website. The project is hosted on GitHub, which we will use to (we hope) embrace the open source development model.

The first module (available now) is an audio player plugin based on the jQuery JavaScript library, and version 0.1 already has some interesting features.

It can play Ogg files natively in Firefox 3.1 using the audio tag. It can also play MP3s using the same javascript API - you just load a different filename and the player works out what to do. The project includes a basic flash-based MP3 player, and some example code to get you started.

The audio timer and volume readouts for the player are updated via a common set of events, so they work for both types of audio, and you can swap freely between them.
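One way to picture "load a different filename and the player works out what to do" is a small dispatch on the file extension. This is an illustrative sketch only, not the plugin's actual code; `chooseBackend` and the backend labels are hypothetical names.

```javascript
// Decide which playback path to use for a given audio file.
// nativeOgg says whether the browser can play Ogg through the audio tag.
function chooseBackend(filename, nativeOgg) {
  var match = /\.([a-z0-9]+)$/i.exec(filename);
  var extension = match ? match[1].toLowerCase() : '';
  if (extension === 'ogg' && nativeOgg) {
    return 'audio-tag';
  }
  if (extension === 'mp3') {
    return 'flash';
  }
  return 'unsupported';
}

console.log(chooseBackend('news.ogg', true));
console.log(chooseBackend('news.mp3', true));
```

Whichever path is chosen would then raise the same timer and volume events, so the readouts need not know which kind of audio is playing.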

At the moment there is limited error checking, and obviously lots of room for improvements and enhancements.

One of these will be a playlist module, and this is something we are going to fund for our own use.

An interesting angle to the project is that we've already started to talk with the blind community to ensure that the player is usable for people with screen readers. The first phase of this is to test the mark-up for the audio player page to ensure it makes sense.

Phase two will check that the basic functionality is simple to use with screen reader and browser hotkeys, and phase three will test playlist manipulation.

I am excited about the project for two reasons. Firstly, we use a lot of free and open source software at Radio NZ, but apart from some bug fixes and minor patches we've not yet been a contributor to the free software commons.

Secondly, I see this as a chance to lift the bar for accessible interface engineering using just HTML and JavaScript. We chose to not use a full flash-based interface - a common approach these days - because it simplifies the building and maintenance of the player to some extent. It also lowers the cost of development, while building on well-understood accessibility techniques.

Let's see how it goes...


Thursday, November 13, 2008

Equal Height Columns with jQuery

We are about to change to jQuery from Mootools, so I'm in the process of porting our current javascript functions. I'll do a separate post later on why we are changing.

In the meantime here is the code for equal height columns:


    jQuery.fn.equalHeights = function() {
      var maxHeight = 0;
      // Find the tallest element in the set.
      this.each(function() {
        if (this.offsetHeight > maxHeight) { maxHeight = this.offsetHeight; }
      });
      this.each(function() {
        $(this).height(maxHeight + "px");
        // Padding and borders can push the rendered height past the
        // target, so take the excess back off.
        if (this.offsetHeight > maxHeight) {
          $(this).height((maxHeight - (this.offsetHeight - maxHeight)) + "px");
        }
      });
    };

It is called like this:

    $("#cont-pri,#cont-sec,#hleft,#hright,#features,#snw").equalHeights();

The jQuery function returns an object containing only the elements found, so you can include identifiers that are not on every page and the function still works. On the RNZ site there are some divs on the home page that do not appear on other pages, but require the equal heights treatment. This means the call above works on any page without generating errors.

Hat tip

Friday, November 7, 2008

How to test for audio tag support in your browser

I am writing a small application at the moment that needs audio tag support for Ogg in Firefox 3.1.


The following is the snippet of code I'm using to test this. (I am using the jQuery library).

    var audio_elements = $('audio');

    if ('volume' in audio_elements[0]) {
      // processing here
    }

This assumes that there is only one audio element on the page (in my case I dynamically insert one). If the volume property exists, I assume that the element can be used to play back Ogg Vorbis files.

There may also need to be a check that the browser actually has the codec installed. (Add a comment if this is the case, and I'll update this post when I work out how).

Update (19 Nov 2008): A better way is to add a check for the Mozilla browser into the test. At the moment, Safari has the audio tag, but only supports media types that work in Quicktime.

    if ('volume' in audio_elements[0] && $.browser.mozilla)

A new function has been added to the HTML5 draft spec - canPlayType() - that gets around these problems.
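A check built on canPlayType() might look something like the sketch below. It assumes the element is passed in by the caller, and `canPlayOggVorbis` is a name invented for this example. Because the method is still a draft feature, the sketch also copes with browsers that lack it.

```javascript
// Returns true when the given audio element reports that it can
// (or might be able to) play Ogg Vorbis.
function canPlayOggVorbis(audioElement) {
  if (!audioElement || typeof audioElement.canPlayType !== 'function') {
    return false; // no audio tag support, or no canPlayType() yet
  }
  var answer = audioElement.canPlayType('audio/ogg; codecs="vorbis"');
  // Only "probably" and "maybe" count as a yes; anything else is a no.
  return answer === 'probably' || answer === 'maybe';
}

// In a browser: canPlayOggVorbis(document.createElement('audio'))
```

This asks the browser about the codec directly, so it would not need the browser-name check used above.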

Thursday, October 23, 2008

Javascript console syntax error on Doctype

I just solved an interesting problem.

There was a Doctype syntax error in the console.

This was caused by an invalid script element on the page - invalid meaning there is no file in the src attribute, or the file does not end in .js.

The fix was to remove the CMS code that was inserting an empty script tag with no src file.

Tricky to track down, simple to fix.
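A quick way to hunt for this kind of tag is to list the script elements that have nothing to load. The helper below is a hypothetical sketch (`findEmptyScripts` is not part of any library), and it only covers the empty-tag case, not a src that points at a file that is not JavaScript.

```javascript
// Return the script elements that have an empty src attribute, or
// that have neither a src attribute nor any inline code.
function findEmptyScripts(scripts) {
  var suspects = [];
  for (var i = 0; i < scripts.length; i++) {
    var src = scripts[i].getAttribute('src');
    var inlineCode = (scripts[i].text || '').replace(/\s+/g, '');
    if (src === '' || (src === null && inlineCode === '')) {
      suspects.push(scripts[i]);
    }
  }
  return suspects;
}

// In a browser console:
// findEmptyScripts(document.getElementsByTagName('script'));
```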


Monday, October 20, 2008

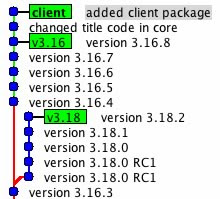

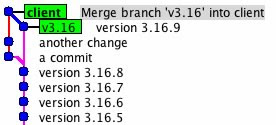

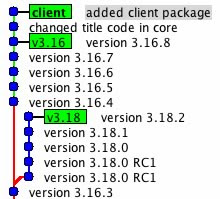

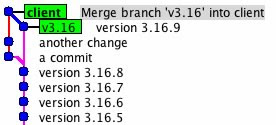

Converting from CVS or SVN to Git

This post is a collection of notes about moving from CVS or Subversion (SVN) to Git.


Over the last 9 months all my projects have moved to Git. Previously I've used SVN, but found creating and merging branches (which in theory looks like the Right Way to Work) to be pretty painful.

Why Change?

"If it ain't broke don't fix it" is the most common reason to not change, closely followed by the lost productivity during the changeover. Even given these factors, if you closely examine the workflow that older repositories force businesses into, the benefits of change become obvious.

Imagine that you have 10 developers working on a project in CVS/SVN. A typical workflow is as follows:

1. A new set of features is assigned to 5 of the programmers. The other 5 are working on bug fixes.

2. Each programmer checks out the current head of the trunk and starts work on their bug or feature.

3. Everyone works on code locally until their work is complete, but NO-ONE commits anything as they work, because that would put the head in an unstable state. Work is only committed when it is complete.

4. The first bug fix is complete and is committed.

5. The first feature is committed.

6. Unit tests are run, and all seems well.

7. The second bug fix is committed.

8. The second feature is committed.

9. The unit tests fail horribly and the application appears to be broken in 10 places.

10. The four programmers who made the last 4 commits have a meeting to see what went wrong. As a result the head is frozen while one of them works out how to fix all the problems.

11. While the head is frozen, everyone else continues to code against the checkout they have (now 4 commits ago) while the current batch of problems are sorted out.

12. The code at the head is fixed, and the next feature is committed.

13. This feature causes more unit tests to fail, and re-breaks 3 of the bugs that have just been fixed.

14. The head is frozen again while the current crop of bugs are fixed.

The above is an amalgam of stories I have heard, and appears to be quite typical in many shops using CVS/SVN.

There are many negative things about this workflow:

1. When features and bugs are committed, they can create more bugs if they clash with other features that have already landed on the trunk.

2. Commits can cause complex bugs because the amount of code in a commit is large.

3. All the work that went into a bug or feature is contained in one commit. If a feature took 5 days of work, this is a lot of change for a commit and can make it harder to identify the point at which something went wrong.

4. The inability to commit (i.e. save) work in progress tends to reduce experimentation.

There is also the problem of merging. Nearly everyone I know says that it takes a lot of time to plan a merge, and in practice many people avoid branching as a result.

Source code management systems should not create extra work.

The Git Workflow

Contrasting the above, Git allows for simple branching and merging, making it trivial to work on new features and bugs in a temporary branch. It also makes it simpler to manage existing stable, development and maintenance branches.

While on a branch, the programmer can make commits as they work. They can branch from the branch to try an experiment. They can roll-back to any previous state. They can even go back to the trunk (master) and do a quick bug fix, before returning to their current work.

The whole point of source code management is to capture work in a progressive manner at a granularity that is useful for understanding the evolution of a feature, and to help in tracking bugs down. (The bisect feature in Git is great to find the point where code broke).
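The idea behind bisect is a binary search over the history: given a commit known to be good and one known to be bad, test the midpoint and discard half the range each time. The sketch below shows that idea over a simple linear list of commits. It is not how Git itself is implemented, and `findFirstBadCommit` and `isBad` are names invented for this example.

```javascript
// commits is ordered oldest to newest; the first commit is known to be
// good and the last is known to be bad. isBad(commit) runs the test.
function findFirstBadCommit(commits, isBad) {
  var good = 0;                  // index of a commit known to work
  var bad = commits.length - 1;  // index of a commit known to be broken
  while (bad - good > 1) {
    var middle = Math.floor((good + bad) / 2);
    if (isBad(commits[middle])) {
      bad = middle;
    } else {
      good = middle;
    }
  }
  return commits[bad];
}

// Example: the breakage was introduced in commit "e".
var commitLog = ['a', 'b', 'c', 'd', 'e', 'f', 'g', 'h'];
var firstBad = findFirstBadCommit(commitLog, function (commit) {
  return commit >= 'e';
});
console.log(firstBad);
```

Small, frequent commits are what make this useful: the commit the search lands on contains only a small change to read through.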

When the new feature is complete, the programmer can rebase their work off the head of the master branch (the trunk). A rebase takes the current (feature) branch and all its commits and moves it to somewhere else. If you rebase a branch off the main trunk of code, it is the same as if you had made the branch off the current head, rather than 20 commits back, which is where you started.

This enables local testing to take place just before the new feature is merged back into the trunk.

Practically speaking, a programmer making a new feature would do the following. I'll include the Git commands required, and I'll assume that the programmer already has a local copy of a remote master repository.

1. Create a new branch

git checkout -b new-feature

(-b creates the named branch)

2. Start work. Create a new function as part of the feature. Commit the function.

git commit -m "This function is to add some stuff to blah"

3. Rebase (the master branch has had 3 commits since work started).

To do this they switch back to master

git checkout master

Then changes from the master repository are pulled and merged locally.

git pull

Then checkout the feature branch

git checkout new-feature

Then rebase it.

git rebase master

(There are faster ways using fewer commands to do this, but I have broken it out so you can follow the logic.)

4. The programmer then runs locally the unit test for the module he is working on.

5. The unit test fails. Because the change made in the commit is quite small it takes only a few minutes to find the problem. The bug is fixed and committed.

git commit -m "Fixed bug caused by changes in module Y"

6. The cycle above continues until the feature is done.

7. The programmer then switches back to the master branch

git checkout master

8. And merges in the changes.

git merge new-feature

9. All unit tests are then run locally. The code passes so the changes can be pushed to the main repository.

git push

The main repository now has the new feature, AND any other changes committed by other programmers, and assuming they used the same process, the HEAD is now in a working condition.

The Git workflow avoids all of the problems that arise from working in isolation and from single large commits. It allows much greater flexibility to experiment, and to test changes against the current trunk at any stage.

It also means that the evolution of all features is available in the repository.

How to Change to Git

There are two ways: cold turkey or slowly. If you go cold-turkey it has to be on a new project (easy), or you have to convert your old repository (harder).

The slow way is to use an adaptor like cvsimport or git-svn.

Personally, I would recommend cold-turkey.

SVN Cold Turkey Links

Basic Migration

Migration to a remote server

Project and server migration

CVS Cold-Turkey Links

CVS to Git transition guide

Understanding Git

I'd recommend watching the following videos before starting to use git.

Linus Torvalds on Git

In this video Linus Torvalds explains the rationale behind Git, and why the distributed model works better than other models.

Randal Schwartz on Git

Randal Schwartz explains the inner workings of Git.

Git with Rails

Ryan Bates shows how to use Git with a simple rails project. This is useful to see how easy it is to use.

Installing Git

On GNU/Linux (from source)

On OSX

On Windows

Learning Git

To learn how to use git, the best videos around are on gitcasts. To get started view the first 4 videos in the basic usage section.

There is also a guide for svn deserters.

Tools to help using Git

The best tools are built right in; you just have to know where to find them. My personal favourites are command line auto-completion and showing the branch in the prompt. I have blogged about this previously.

Those of you who are used to CVS/SVN may not see the point of the second of these; once you start using Git you will be making and merging branches all over the place, and the prompt is a great reminder of where you are. The prompt that comes with Git also shows when you are part way through a merge or rebase (i.e. you have unresolved conflicts).

You can use gitk to view the repository (or gitx for OSX).

If there are other resources that readers find useful put them in the comments and I'll add them to this post.

Thursday, September 25, 2008

Native Ogg playback with Firefox 3.1

I have added a new Javascript function to the Radio NZ site called Oggulate.

This function parses through pages that have Ogg download links and replaces them with a Play/Pause button. The button plays (and pauses) the Ogg file using Firefox 3.1's built in support for Ogg Vorbis.

The Oggulator can be activated by installing a small bookmarklet:

javascript:oggulate();

Clicking on the bookmarklet runs the function and gives you a nice page full of buttons to play Ogg. You will need one of the latest Firefox 3.1 builds for this to work.
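For readers curious how a function like this might hang together, here is a minimal sketch under stated assumptions; it is not the actual Oggulate source. `isOggLink` and `toggleLabel` are hypothetical helpers, and the browser wiring is left as a comment because it depends on the page.

```javascript
// True when a link points at an Ogg file.
function isOggLink(href) {
  return /\.ogg$/i.test(href);
}

// Label the button should show, given whether the audio is paused.
function toggleLabel(isPaused) {
  return isPaused ? 'Play' : 'Pause';
}

// Browser wiring, in outline:
//   for each <a> whose href passes isOggLink(), create an audio
//   element for that href, swap the link for a button, and on click
//   call audio.play() or audio.pause() depending on audio.paused,
//   then set the button text with toggleLabel(audio.paused).

var links = ['/audio/news.ogg', '/audio/news.mp3', '/about'];
console.log(links.filter(isOggLink));
```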

The functionality in the script is rudimentary, and 3.1 is still in development, so don't expect it to be perfect. (I notice there is often a delay before playback starts for the first clip you play on a page, and I have had a few crashes and lock-ups).

This feature of the RNZ site is experimental at the moment, so I'm not able to offer support. It is primarily so people can test Firefox.

If anyone wants to add features or improve the function, send your changes to webmaster at radionz dot co dot nz. I'll upload them (after reviewing the changes) so everyone can access them.

(NB: As at 2013 this feature is no longer supported.)

Wednesday, September 10, 2008

Clearing the Cache on Matrix

Here's the problem:

We run a master/slave server pair. Each has a web server and database server. The master is accessed via our intranet, and is where we do all our editing and importing of content. This is replicated to the public slave servers.

The slave server has a Squid reverse proxy running in front of it to cushion the site against large peaks in traffic. These peaks occur when our on-air listeners are invited to go to the site to get some piece of information related to the current programme. The cache time in Matrix (and therefore in Squid) is 20 minutes.

The database is replicated with Slony, while the filesystem is synchronised with a custom tool based on rsync.

If we update content it can take up to 20 minutes for that content to show on the public side of the site. This is a problem when we want to do fast updates, especially for news content.

We've looked at a number of solutions, but none quite do what we wanted.

In a stand-alone (un-replicated) system clearing the cache is simple. There is a trigger in Matrix called Set Cache Expiry that allows you to expire the Matrix cache early. This works OK on a single server system but not if you have a cluster and use Squid. The main issue in that case is that even though the trigger is synchronous, the clearing is not. If Slony has a lot of work to do, there is still a chance that the expiry date has passed before the asset is actually updated on the slave.

A clearing system needs to be 100% predictable, which led me to devise an alternative solution.

We needed to do three things:

a) Determine when changes made on the Master have been replicated to the Slave.

b) Collect ids of assets that are changed and the pages they appear on.

c) Clear the assets collected in b) when we know a).

This is how we do it.

a) There are two queries that can be run on the Master database to get this information:

psql -U postgres -h server_name db_name -qAtc "SELECT st_last_event FROM _replication.sl_status"

returns a sequence number which represents where the Slony master is currently at.

psql -U postgres -h server_name db_name -qAtc "SELECT st_last_received FROM _replication.sl_status"

returns the sequence number where the slave is up to.

If you grab the master sequence number after a content change (a database query), you can tell that the change has reached the slave when its sequence number is the same or greater.
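As a rough illustration of that comparison, here is a minimal Python sketch (the production scripts are Perl). The function names, the polling interval and the `read_slave_seq` callable are assumptions made for the example; in practice the callable would wrap the `st_last_received` query shown above.

```python
import time


def change_has_replicated(master_seq_at_change, slave_seq):
    """True once the slave has reached the sequence number noted on the master."""
    return slave_seq >= master_seq_at_change


def wait_for_replication(master_seq_at_change, read_slave_seq,
                         poll_seconds=3, max_polls=100, sleep=time.sleep):
    """Poll the slave sequence number until the change has arrived.

    read_slave_seq is a hypothetical callable that returns the slave's
    current sequence number as an integer.
    """
    for _ in range(max_polls):
        if change_has_replicated(master_seq_at_change, read_slave_seq()):
            return True
        sleep(poll_seconds)
    # Gave up: the slave never caught up within max_polls checks.
    return False
```

Passing the reader and the sleep function in as arguments keeps the check easy to try out without a database.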

b) We have a script that imports news items to Matrix. One of the attributes in the imported data is a list of asset ids that are affected by the import action. We know in advance what asset lists and pages the content will show on.

When the script runs it collects these for each imported asset, and compiles a list of asset ids (with no duplicates).
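The collection step could look something like the following Python sketch. It is illustrative only: the function names are hypothetical, and the real import script is written in Perl.

```python
def unique_asset_ids(ids_per_imported_item):
    """Merge the asset id lists of all imported items, dropping duplicates.

    The first-seen order is kept so the result is stable between runs.
    """
    seen = set()
    ordered = []
    for ids in ids_per_imported_item:
        for asset_id in ids:
            if asset_id not in seen:
                seen.add(asset_id)
                ordered.append(asset_id)
    return ordered


def as_assets_argument(asset_ids):
    """Format the ids as the comma separated list passed via --assets."""
    return ",".join(str(asset_id) for asset_id in asset_ids)
```

For example, three stories that all appear on the home page (asset 200) contribute that id only once.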

c) This is how it is bolted together.

After some assets have been imported, the import script calls a second script which adds the items to a queue:

system( 'perl add_to_cache_queue.pl --assets="' . $asset_list . '"' );

This script is short, so I'll reproduce it here.

#!/usr/local/bin/perl

# This script is used to add items to the DirQueue on the current machine
# it is for testing purposes

use strict;
use lib ".";
use Getopt::Long;
use IPC::DirQueue;

my $assets_to_clear = '';
GetOptions( "assets=s" => \$assets_to_clear );

# nothing to queue
if( $assets_to_clear eq '' ){
    exit(1);
}

# ask the master where the Slony event sequence is up to
my $command = 'psql -U postgres -h host db -qAtc "SELECT st_last_event FROM _replication.sl_status"';
my $last_event = `$command`;
chomp $last_event;
print "Last event: $last_event\n";

# a queue to add items to. Locks can last for 2 minutes
my $dq = IPC::DirQueue->new({ dir => "matrix-cache-queue", data_file_mode => 0777, active_file_lifetime => 120 });

# the sequence number and a timestamp are stored as metadata with the job;
# scalar() stops localtime from expanding into a list inside the hash
if( $dq->enqueue_string( $assets_to_clear, { 'id' => $last_event, 'time' => scalar localtime(time) } ) ){
    exit 0;
}

print "could not queue file\n";
exit 1;

A second script runs on the machine as a worker process, watching the queue. This uses the loop code I outlined in my last post.

The queue itself is Perl's IPC::DirQueue, a very cool module for managing a filesystem-based queue.

When the worker finds an item on the queue, it checks the Slony sequence number that was saved with the data. If the slave has reached that number, then yet another script is run, but this time on the slave (public) server.

php ./matrixFlushCache.php --site="/path/to/site/radionz" --assets="comma_sep_list"

This last script resolves the asset id numbers in Matrix to a list of file system cache buckets and URLs. The cache buckets are removed, and the URLs are also cleared from the Squid cache. The cache is then primed with the new page. The script was written by Colin Macdonald.

This is what the output looks like:

** lock gained Sat Aug 30 20:03:01 2008 running jobs: * * * * ?

Slony slave is at 485328, Got a job at: 485327

data:200,1585920,1584495,764616,etc

Clearing cache for #200

Unlinking cache/1313/e8f12c0d7b5889d748872bdad215c0cf

Unlinking cache/1113/aaa1666c26af81a0b044ab2fecb950ae

Deleting DB records (2 reported, 2 deleted)

.

snip a bunch of the same but for different assets.

.

Refreshing urls:

http://www.radionz.co.nz/ ... 200

http://www.radionz.co.nz/home ... 200

http://www.radionz.co.nz/news/business ... 200

http://www.radionz.co.nz/news/business/ ... Skipped

snip a bunch of URLs

- lock released

The script is looping and checking every three seconds for a new job (queued asset ids to clear). The * means it checked for a queued job and none was found. The ? means that a job was found but that the slave had a lower sequence number than the one stored with the job.
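The decision made on each pass of the loop can be sketched in Python as follows. This is an illustration under assumptions, not the worker itself: an in-memory deque stands in for the IPC::DirQueue directory, the job keys `id` and `assets` are hypothetical names, and `flush` stands in for the call to the flush script.

```python
from collections import deque


def poll_once(queue, slave_seq, flush):
    """Check the queue once and report what happened.

    Returns "*" when no job is queued, "?" when a job is queued but the
    slave has not yet reached the job's sequence number, and "flushed"
    when the job was taken off the queue and handed to flush().
    """
    if not queue:
        return "*"
    job = queue[0]
    if slave_seq < job["id"]:
        # Leave the job where it is and try again on the next pass.
        return "?"
    queue.popleft()
    flush(job["assets"])
    return "flushed"
```

Leaving a job at the head of the queue until the slave catches up means nothing is flushed before the new content has actually been replicated.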

The top 5 stories (the ones on the home page) are also cleared and refreshed.

The flush-cache script has a URL filter so you can exclude certain URLs from being flushed. An example is the query-ridden script-kiddie hacks that people try to run against sites. There is no point in re-caching those.

Another is URLs ending in /. In our case this mostly means that someone has deleted the story off the end of the URL to see what they get, so there is no reason to refresh these either.
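A filter along these lines might look like the Python sketch below. The function name is hypothetical, and the exception for the site root is an inference from the sample output above, where the home page URL is refreshed but /news/business/ is skipped; the real filter is part of the PHP flush script and may work differently.

```python
from urllib.parse import urlsplit


def should_refresh(url):
    """Decide whether a flushed URL is worth re-priming in the cache."""
    parts = urlsplit(url)
    # Anything with a query string is treated as a probe, not real content.
    if parts.query:
        return False
    # A trailing slash on anything but the site root is a truncated story URL.
    if parts.path.endswith("/") and parts.path != "/":
        return False
    return True
```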

A feature that I'm working on will clear just the Matrix and Squid caches for the non-front page stories. These all have a 2 minute expiry time and if we expire all the caches the end user will re-prime the cache. There is no performance hit in doing this as the browser and squid come back for these pages every two minutes anyway.

The system I have just outlined allows us to remotely add and update items in Matrix and for those changes to appear on the site within 5 minutes. I hope someone finds this useful.

Friday, August 29, 2008

Opening up old content and more Ogg Vorbis

Just today we completed work on the Saturday Morning with Kim Hill programme archive.

This opens up all the content that has been broadcast on the show since the start of the year.

All audio can be downloaded in MP3 format, and audio published since August is also available in Ogg Vorbis. The programme archive page lists a summary of all programmes, and you can also search audio and text.

Morning Report and Nine To Noon now have Ogg Vorbis, and I expect to be able to open up their older content in the next few weeks.

Other programmes will have Ogg Vorbis added as I have time.

I have a test RSS feed (the URL might change) with Ogg enclosures for Saturday Morning. Send any feedback to webmaster at radionz dot co dot nz.

Wednesday, August 27, 2008

Creating a Pseudo Daemon Using Perl

One of the trickier software jobs I've worked on is a Perl script that runs almost continuously.

The script was needed to check a folder for new content (in the form of news stories) and process them.

The stories are dropped into a folder as a group via ftp, and the name of each story is written into a separate file. The order in this file is the order the stories need to appear on the website.

The old version of the script ran by cron every minute, but there were three problems.

The first is that you might have to wait a whole minute for content to be processed, which is not really ideal for a news service.

The second is that should the script be delayed for some reason, it is possible to end up with a race condition with two (or more) scripts trying to process the same content.

The third is that the script could start reading the order file before all the files were uploaded.

In practice the second and third problems were very rare, but very disruptive when they did occur. The code needed to avoid both.

The new script is still run once per minute via cron, but contains a loop which allows it to check for content every three seconds.

It works this way:

1. When the script starts it grabs the current time and tries to obtain an exclusive write lock on a lock file.

2. If it gets the lock it starts the processing loop.

3. When the order file is found the script waits for 10 seconds. This is to allow any current upload process to complete.

4. It then reads the file, deletes it, and starts processing each of the story files.

5. These are all written out to XML (which is imported into the CMS), and the original files are deleted.

6. When this is done, the script continues to look for files until 55 seconds have elapsed since it started.

7. When no time is left it exits.
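For readers who don't use Perl, the locking and timing parts of these steps can be sketched in Python. This is a simplified illustration, not the production script: the function and parameter names are hypothetical, the 10 second upload wait is left out, and `fcntl` is only available on Unix-like systems.

```python
import fcntl
import time


def run_time_boxed(jobs, process, lock_path, budget=55, pause=3,
                   clock=time.time, sleep=time.sleep):
    """Process jobs in rotation until the time budget is spent.

    Returns the number of job runs completed.
    """
    # The deadline is fixed before waiting for the lock, so a run that
    # waited too long finds its time is already up and exits at once.
    stop_time = clock() + budget
    runs = 0
    if not jobs:
        return runs
    with open(lock_path, "a") as lock_file:
        # Blocks until any earlier run has released the lock.
        fcntl.flock(lock_file, fcntl.LOCK_EX)
        try:
            while clock() < stop_time:
                for job in jobs:
                    if clock() >= stop_time:
                        break
                    process(job)
                    runs += 1
                    sleep(pause)
        finally:
            fcntl.flock(lock_file, fcntl.LOCK_UN)
    return runs
```

Taking the start time before asking for the lock is the important design choice: only one run can hold the lock, and any run that queued behind a slow one gives up as soon as it gets its turn.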

This is what the loop code looks like:

use Fcntl qw(:flock);   # provides LOCK_EX

my $stop_time = time + 55;
my $loop = 1;
my $locking_loop = 1;
my $has_run = 0;

while( $locking_loop ){
    # first see if there is a lock file and
    # wait till the other process is done if there is
    if( open my $LOCK, '>>', 'inews.lock' ){
        flock($LOCK, LOCK_EX) or die "could not lock the file";
        print "** lock gained " . localtime(time) . " running jobs: " if ($debug);
        while( $loop ){
            my $job_count = keys(%jobs);
            for my $job (1..$job_count){
                # run the next job if we are within the time limit
                if( time < $stop_time ){
                    $has_run ++;
                    print "$job ";
                    process( $job );
                    sleep(3);
                }
                else{
                    $loop = 0;
                    $locking_loop = 0;
                }
            }
        }
        close $LOCK;
        print "- lock released\n"
    }
    else{
        print "Could not open lock file for writing\n";
        $locking_loop = 0;
        # nothing happens here
    }
    unless($has_run){
        print localtime(time) . " No jobs processed\n"
    }
}

This is the output of the script (each number is the name of a job; we have three jobs, one for each ftp directory):

** lock gained Tue Jul 22 08:17:00 2008 running jobs: 1 2 3 1 2 3 1 2 3 1 2 3 1 2 3 1 2 3 1 - lock released

** lock gained Tue Jul 22 08:18:00 2008 running jobs: 1 2 3 1 2 3 1 2 3 1

24 news files to process

* Auditor-General awaits result of complaint about $100,000 donation

* NZ seen as back door entry to Australia

.

snip

.

- lock released

** lock gained Tue Jul 22 08:19:23 2008 running jobs: 1 2 3 1 2 3 1 2 3 1 2 - lock released

You can see that the script found some content part way through its cycle, and ran over the allotted time, so the next run of the script did not get a full minute to run.

This ensures that there is never a race between two scripts. You can see what happens next when a job takes more than 2 minutes:

- lock released

** lock gained Tue Jul 22 10:46:32 2008 running jobs: - lock released

Tue Jul 22 10:46:32 2008 No jobs processed

** lock gained Tue Jul 22 10:46:32 2008 running jobs: - lock released

Tue Jul 22 10:46:32 2008 No jobs processed

** lock gained Tue Jul 22 10:46:32 2008 running jobs: - lock released

Tue Jul 22 10:46:32 2008 No jobs processed

** lock gained Tue Jul 22 10:46:32 2008 running jobs: 1 2 3 1 2 3 1 2

All the scripts that were piling up waiting to get the lock exited immediately, once they found that their time was up.

I am using the same looping scheme to process a queue elsewhere in our publishing system and I'll explain this in my next post.

Friday, August 15, 2008

Using Git to manage rollback on dynamic websites

Page rollback is useful for archival and legal reasons - you can go back and see a page's contents at any point in time. It is also a life-saver if some important content gets accidentally updated - historical content is just a cut and paste away. The MediaWiki software that runs Wikipedia is a good example of a system with rollback.

There are several methods available to a programmer wanting to enable a rollback feature on a Content Management System.

The simplest way to do this is to store a copy of every version of the saved page, and maintain a list of pointers to the most recent pages. It would also be possible to store diffs in the database - old versions are saved as a series of diffs against the current live page.

A useful feature would be the ability to view a snapshot of the entire site at any point in time. This is probably of greatest interest to state-owned companies and Government departments who need to comply with legislation like New Zealand's Public Records Act.

A database-based approach would be resource intensive - you'd have to get all the required content, and then there is the challenge of displaying an alternative version of the site to one viewer while others are still using the latest version.

I was thinking of alternatives to the above, and wondered if a revision control system might be a more efficient way to capture the history, and to allow viewing of the whole site at points in time.

Potentially this scheme could be used with any CMS, so I thought that I'd document it in case someone finds it useful.

Git has several features that might help us out:

Cryptographic authentication

According to the Git site: "The Git history is stored in such a way that the name of a particular revision (a "commit" in Git terms) depends upon the complete development history leading up to that commit. Once it is published, it is not possible to change the old versions without it being noticed. Also, tags can be cryptographically signed."

This is a great choice from a legal perspective.

Small Storage Requirements

Again, from the site: "It also uses an extremely efficient packed format for long-term revision storage that currently tops any other open source version control system".

Josh Carter has some comparisons and so does Pieter de Biebe.

Overall it looks as though Git does the best job of storing content, and because it is only storing changes it'll be more efficient than saving each revision of a page in a database. (Assuming that is how it is done.)

And of course Git is fast, although we are only using commit and checkout in this system.

Wiring it Up

To use Git with a dynamic CMS, there would need to be a save hook that does the following.

When a page is saved:

1. Get the content from the page being saved and the URL path.

2. Save the content and path (or paths) to a queue.

3. The queue manager would take items off the queue, write them to the file system and commit them.
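The third step might be sketched in Python like this. Everything here is hypothetical, since the scheme is only an idea: the function name, the mapping of a URL path to an index.html file, and the commit message are all assumptions. It also assumes the Git working tree in `repo_dir` already holds the initial save of the site.

```python
import subprocess
from pathlib import Path


def commit_page(repo_dir, url_path, content, run=subprocess.run):
    """Write one saved page into the working tree and commit it."""
    # Hypothetical mapping: /news/business -> news/business/index.html
    relative = Path(url_path.strip("/")) / "index.html"
    target = Path(repo_dir) / relative
    target.parent.mkdir(parents=True, exist_ok=True)
    target.write_text(content, encoding="utf-8")

    # Stage and commit just this page; check=True raises if git fails,
    # which includes the case where the content has not changed.
    run(["git", "-C", str(repo_dir), "add", "--", str(relative)], check=True)
    run(["git", "-C", str(repo_dir), "commit", "-m", f"Save {url_path}"],
        check=True)
    return relative
```

A real queue manager would also need to validate the URL path before using it as a file name, and decide what to do when a save makes no change to the page.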

These commits are on top of an initial save of the whole site, whatever that state may be. The CMS would need a feature that outputs the current site, or perhaps a web crawler could be used.

To view a page in the past, it is a simple matter to check out the commit you want and view the site as static pages via a web server. Because every commit is built on top of previous changes, the whole site is available as it was when a particular change was made.

The purpose of the queue manager is to allow commits to be suspended so that you can check out an old page, or for maintenance. Git gc could be run each night via cron while commits were suspended. I'd probably use IPC::DirQueue because it is fast, stable, and allows multiple processes to add items to the queue (and take them off), so there won't be any locking or race issues.

Where the CMS is only managing simple content - that is, there are no complex relationships between assets such as nesting, or sharing of content - this scheme would probably work quite well.

There are problems though when content is nested (embedded) or shared with other pages, or is part of an asset listing (a page that displays the content of other items in the CMS).

If an asset is nested inside another asset the CMS would need to know about this relationship. If the nested asset is saved then any assets it appears inside need to be committed too, otherwise the state of content in Git will not reflect what was on the site.
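To make the nesting problem concrete, here is a small Python sketch of how the set of assets to commit could be worked out. It assumes the CMS can supply a mapping from each asset to the assets it appears inside; the mapping and the function name are hypothetical.

```python
from collections import deque


def assets_to_commit(saved_asset, appears_inside):
    """Return the saved asset plus every asset that directly or
    indirectly embeds it.

    appears_inside maps an asset id to the ids of the assets it is
    nested in.
    """
    to_commit = {saved_asset}
    pending = deque([saved_asset])
    while pending:
        asset = pending.popleft()
        for parent in appears_inside.get(asset, ()):
            # The membership check also protects against circular nesting.
            if parent not in to_commit:
                to_commit.add(parent)
                pending.append(parent)
    return to_commit
```

Saving a promo that is nested in a sidebar, which in turn appears on the home and news pages, would then commit all four assets.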

I'd expect that a linked tree of content usage would need to be implemented to manage intra-page relationships and provide the information about which pages need to be committed.

This is all theoretical, but feel free to post any comments to extend the discussion.

Saturday, August 9, 2008

Setting up Ogg Vorbis Coding for MP2 Audio Files

Today on Radio New Zealand National, Kim Hill interviewed Richard Stallman on the Saturday Morning programme. Prior to the interview Richard requested that the audio be made available in Ogg Vorbis format, in addition to whatever other formats we use.

At the moment our publishing system generates audio in Windows Media and MP3 formats, so I had two options: generate the Ogg files by hand on the day, or add an Ogg Vorbis module to do it automatically.

Since the publishing system is based on free software (Perl), it was a simple matter to add a new function to our existing custom transcoder module. It also avoided the task of manually coding and uploading each file. Here is the function:

All the Ogg parameters are hard-coded at this stage, and I'll add code to allow for different rates later, once I have done some tests to see what works best for our purposes.

sub VORBIS
{
    my( $self ) = shift;
    my( $type ) = TR_256;    # rate key; TR_256 and %rates are defined elsewhere in the module

    my $inputFile   = $self->{'inputFile'};
    my $basename    = $self->{'basename'};
    my $path        = $self->{'outputPath'};
    my $ext         = '.ogg';
    my $output_file = "$path\\$basename$ext";

    # lame decodes the MP2 source and writes the audio to stdout
    my $lame_decoding_params = qq{ --mp2input --decode "$inputFile" - };

    # oggenc2 reads that audio from stdin and writes the Ogg Vorbis file
    my $ogg_encoding_params = qq{ - --bitrate 128 --downmix --quality 2 --title="$self->{'title'}" --artist="Radio New Zealand" --output="$output_file" };

    my $command = "lame $lame_decoding_params | oggenc2 $ogg_encoding_params";
    print "$command \n" if ($self->{'debug'});

    &RunJob( $self, $command, "m$rates{$type}" );

    # we must return a code so the caller knows what to do
    return 1;
}

Once this code was in place, the only other change was to update the master programme data file to switch on the new format.

After that, any audio published to the Saturday programme would automatically get the new file format, and this would also be uploaded to our servers.

The system was originally built with this in mind - add a new type, update the programme data, done - but this is the first time I have used it.
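The "add a new type, update the programme data, done" idea can be sketched as a dispatch table. This is not the actual transcoder module: the %encoders table, the %programmes structure, the format names and the stub encoders are all assumptions made for illustration.

```perl
use strict;
use warnings;

# Hypothetical encoder table: format name => code that produces that format.
# Real encoders would build and run a command; these stubs only describe it.
my %encoders = (
    mp3    => sub { return "encode $_[0] as MP3" },
    wma    => sub { return "encode $_[0] as Windows Media" },
    vorbis => sub { return "encode $_[0] as Ogg Vorbis" },
);

# Hypothetical programme data: the formats switched on for each programme.
my %programmes = (
    saturday => { formats => [ 'mp3', 'wma', 'vorbis' ] },
    other    => { formats => [ 'mp3', 'wma' ] },
);

# Run every encoder enabled for a programme; unknown formats are skipped.
sub jobs_for {
    my ( $programme, $file ) = @_;
    my $formats = $programmes{$programme}{formats} || [];
    my @jobs;
    for my $format (@$formats) {
        next unless exists $encoders{$format};
        push @jobs, $encoders{$format}->($file);
    }
    return @jobs;
}

print "$_\n" for jobs_for( 'saturday', 'interview.mp2' );
```

With a layout like this, switching on a new format for one programme is a one-line change to its data, and no other programme is affected.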

You can see the result on today's Saturday page.

Monday, July 28, 2008

News Categories at Radio NZ

We've just completed a major update to the news section of the site that allows us to categorise news content.

We use the MySource Matrix CMS, and categorising content is a trivial exercise using the built-in asset listing pages. The tricky part in our case is that none of our news staff actually write their content directly in the CMS, or even have logins. Why?

When we first started using Matrix, the system's functionality was much more limited than it is today (in version 3.18.3). For example, paint layouts - which are used to style news items - had not yet been added to the system.

The available features were perfectly suitable for the service that we were able to offer at that time though.

The tool used by our journalists is iNews - a system optimised for processing news content in a large news environment - and it was significantly faster than Matrix for this type of work (as it should be). Because staff were already adept at using it, we decided to use iNews to edit and compile stories and export the result to the CMS.

This would also mean that staff didn't have to know any HTML, and we could add simple codes to the text to allow headings and other basic web formatting. It would also dramatically simplify initial and on-going training.

The proposed process required two scripts. The first script captured the individual iNews stories, ordered them, converted the content to HTML, and packaged them with some metadata into an XML file. (iNews can output a group of stories via FTP with a single command.)
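To make the first script's job more concrete, here is a minimal sketch of packaging ordered stories into XML. The element and attribute names and the sample story are invented; the real export format is not shown in this post. The escaping is done by hand for the four characters that matter here, rather than with an XML library.

```perl
use strict;
use warnings;

# Escape the characters that are special in XML text and quoted attributes.
sub xml_escape {
    my ($text) = @_;
    $text =~ s/&/&amp;/g;
    $text =~ s/</&lt;/g;
    $text =~ s/>/&gt;/g;
    $text =~ s/"/&quot;/g;
    return $text;
}

# Package stories (already converted to HTML, already in running order).
# Element and attribute names are hypothetical.
sub package_stories {
    my ( $published, @stories ) = @_;
    my $xml = qq{<?xml version="1.0" encoding="UTF-8"?>\n};
    $xml .= sprintf qq{<stories published="%s">\n}, xml_escape($published);
    my $position = 0;
    for my $story (@stories) {
        $position++;
        $xml .= sprintf qq{  <story position="%d">\n}, $position;
        $xml .= sprintf qq{    <headline>%s</headline>\n}, xml_escape( $story->{headline} );
        $xml .= sprintf qq{    <body>%s</body>\n}, xml_escape( $story->{body} );
        $xml .= "  </story>\n";
    }
    return $xml . "</stories>\n";
}

print package_stories(
    '200807221513',
    { headline => 'Fish & chips shortage', body => '<p>Example body.</p>' },
);
```

The position attribute carries the running order, so the importer can list stories exactly as they were ordered by the newsroom.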

The XML was copied to the CMS server where script 2 imported the specified content.

Each block of stories was placed in a folder in the CMS. The most recent folder represented the current publish, and was stamped with the date and time. The import script then changed the settings on the site's home page headline area, the RSS news feed and the News home to list the stories in this new folder.
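Judging by the example URLs in this post, the date and time stamp looks like YYYYMMDDhhmm. Assuming that format, a folder name could be generated along these lines; the function name is made up for the sketch.

```perl
use strict;
use warnings;
use POSIX qw(strftime);

# Build a folder name such as 200807221513 (YYYYMMDDhhmm) from an epoch
# time, using the server's local time zone.
sub publish_folder_name {
    my ($epoch) = @_;
    return strftime( '%Y%m%d%H%M', localtime($epoch) );
}

print publish_folder_name(time), "\n";
```

Because the name sorts chronologically as plain text, the most recent folder is always the last one in an alphabetical listing.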

Stories appeared on the site as they were ordered in the iNews system, and the first five were automatically displayed on the home page.

On the site, story URLs looked like this:

www.radionz.co.nz/news/200807221513/134e5321

and each new publish replaced any previous versions:

www.radionz.co.nz/news/200807221545/2ed5432

On the technical side, the iNews processing script ran once a minute via a cron job, but over time we found two problems with this approach.

The first was that the URL for a story was not unique over time - each update got a new URL. RSS readers had trouble working out what was new content and what was just a new publish of the same story. People linking to a story would get a stale version of the content, depending on when it was actually updated.

The second related to the 1 minute cycle time of the processing script. Most of the time this worked fine, but occasionally we'd get a partial publish when the script started before iNews had finished publishing. On rare occasions we'd end up with two scripts trying to process the same content.
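Overlapping runs of this kind are commonly avoided by taking an exclusive, non-blocking lock when the script starts. The sketch below is a generic illustration using Perl's flock, not the script used on the site; the lock file name and location are assumptions.

```perl
use strict;
use warnings;
use Fcntl qw(:flock);
use File::Spec;

# Hypothetical lock file location.
my $lock_path = File::Spec->catfile( File::Spec->tmpdir, 'inews-export.lock' );

# Try to take an exclusive lock without waiting. Returns the open
# filehandle on success (keep it open for the life of the job), or
# nothing if another process already holds the lock.
sub acquire_lock {
    my ($path) = @_;
    open( my $fh, '>>', $path ) or die "Cannot open $path: $!";
    return $fh if flock( $fh, LOCK_EX | LOCK_NB );
    close $fh;
    return;
}

if ( my $lock = acquire_lock($lock_path) ) {
    print "Lock acquired, processing stories\n";
    # ... process the exported stories here ...
    close $lock;    # closing the handle releases the lock
}
else {
    print "Another run is still in progress, skipping this cycle\n";
}
```

A second copy started by cron while the first is still working simply exits, so the same content is never processed twice.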

The Update

The first thing we had to do was revise the script for importing content. This work was done by Mark Brydon, one of the developers at Squiz. The resulting script allowed us to:

- add a new story at a specific location in Matrix.

- update the content in an existing story (keeping the URL).

- remove a story.

- put stories into a folder structure based on the date.

One of the early design decisions was to use SHA1 hashes to compare content when updating. As you'll see later, it made the script more flexible as we fine-tuned the publishing process. Initially the iNews exporter generated SHA1s based on the headline and bodycopy, and these were stored in the spare fields in the Matrix news asset. These values could be checked to determine if content had changed.

The second task was to update the iNews exporter to generate the new XML. This proved to be a small challenge as I wanted to run the old and the new import jobs on the same content at the same time. Live content generated by real users is the best test data you can get, so new attributes were added to the XML where required to support this.

The first 3 weeks of testing were used to streamline the export script and write unit tests for the import script. I also added code to the exporter to process updates and removals of stories.

Add. This mode is simple enough - if the story was not in the system, add it.

Update. The update function used the headline of the story to determine a match with an existing story on the site. We limited the match to content in the last 24 hours.

This created a problem though - if the headline was changed the system would not be able to find the original. To get around this I created the 'replace' mode. To replace a headline staff would go to the site and locate the story they wanted, capture the last segment of the URL, and paste this into the story with a special code.

In practice this proved to be unwieldy and unworkable. It completely interrupted the flow of news processing, and we dropped it after only 24 hours of testing.

As an aside, the purpose of a long test period is to solve not only technical issues, but also operational ones. The technology should facilitate a simple work-flow that allows staff to get on with their work. The technical side of things should be as transparent as possible; it is the servant, not the master.

What was needed was a unique ID that stayed with a story for its life in the system. iNews does assign a unique ID to every story, but these are lost when the content is duplicated in the system or published. After looking at the system again, I discovered (and I am kicking myself for not noticing earlier) that the creator id and timestamp are unique for every story, and are retained even when copies are made.

It was a simple matter to derive a SHA1 from this data, instead of the headline, and use that for matching stories in the import script. Had I not used a spare field in the CMS to hold the SHA1, we'd have had to rework the code.

After a couple of days of testing with the new SHA1, it worked perfectly - staff could update the headline or bodycopy of any story in iNews and when published it would update on the test page without any extra work.

This updated process allowed staff to have complete control over the listing order and content of stories simply by publishing them as a group. If only the story order was altered, the updated time on the story was not changed.

It has worked out to be very simple, but effective.

Kill. To kill a story a special code is entered into the body of the story. The import script sets the mode to kill and the CMS importer purges it from the system.

Because of all the work done on the iNews export script, I decided to fix the issues mentioned above - partial publishes, the 1 minute cycle time, and two scripts working at once.

The new script checks for content every 3 seconds, waits for iNews to finish publishing, and uses locking to avoid multiple jobs clashing. I'll cover the gory details of the script in a later post.

And work continues to make the publishing process even simpler - I am looking at ways to remotely move content between categories and to simplify the process to kill items.
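To illustrate the two uses of SHA1 described above: one hash, derived from the creator id and timestamp, identifies a story for its whole life, while a second hash of the headline and bodycopy shows whether the content has changed. This is only a sketch under stated assumptions; the field names, the separators, the sample values and the import_action helper are all invented and are not taken from the real exporter or importer.

```perl
use strict;
use warnings;
use Digest::SHA qw(sha1_hex);

# Stable identity: built from fields that never change for a story.
# (Field names and the "|" separator are assumptions for this sketch.)
sub story_id {
    my ($story) = @_;
    return sha1_hex( join '|', $story->{creator_id}, $story->{created} );
}

# Content fingerprint: changes whenever the headline or body changes.
sub content_hash {
    my ($story) = @_;
    return sha1_hex( join "\n", $story->{headline}, $story->{body} );
}

# Decide what an importer should do, given the hashes already stored
# in the CMS (story id => content hash).
sub import_action {
    my ( $story, $stored ) = @_;
    my $id = story_id($story);
    return 'add'    unless exists $stored->{$id};
    return 'update' unless $stored->{$id} eq content_hash($story);
    return 'skip';
}

my %story = (
    creator_id => 'jsmith',
    created    => '2008-07-22 15:13:02',
    headline   => 'First headline',
    body       => 'Story text.',
);
my %stored = ( story_id( \%story ), content_hash( \%story ) );

$story{headline} = 'Revised headline';    # edited in the newsroom system
print import_action( \%story, \%stored ), "\n";
```

Because the identity no longer depends on the headline, the revised story is still matched to its original and is reported as an update rather than a new story.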